This seminar introduces the research of the Mathematical Statistics Team.


2021/07/07 15:00-17:00 Online Streaming via Zoom Webinar (registration required)

Mathematical Statistics Team (https://aip.riken.jp/labs/generic_tech/math_stat/) at RIKEN AIP

Speaker 1: Hidetoshi Shimodaira (30 mins)

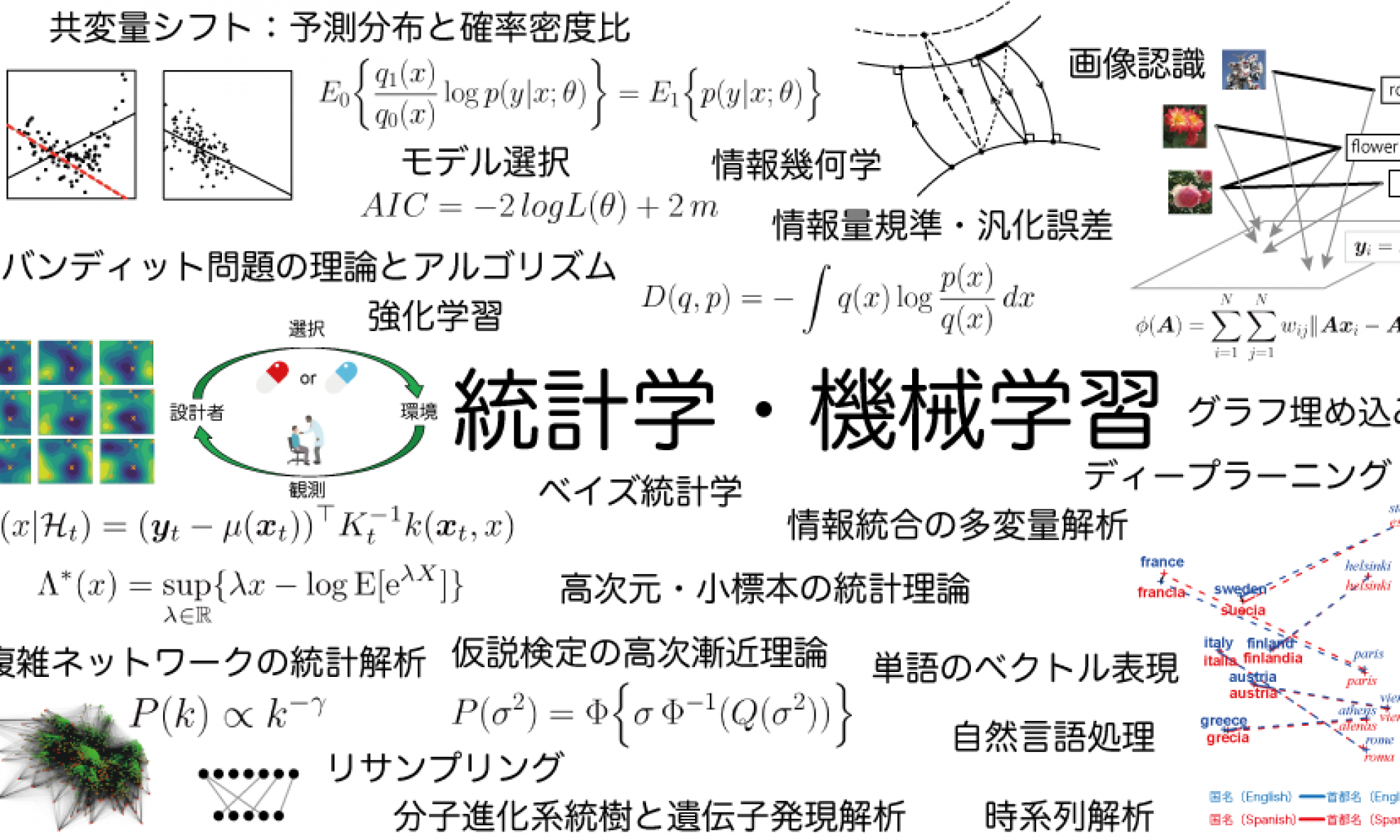

Title: Statistical Intelligence for Advanced Artificial Intelligence

Abstract: Our goal is to develop a data-driven methodology with statistical inference for artificial intelligence, which may be called “statistical intelligence.” In the first half of the talk, I give an overview of our research topics: (1) representation learning via graph embedding for multimodal relational data, (2) valid inference via bootstrap resampling for many hypotheses with selection bias, and (3) statistical estimation of the growth mechanisms of complex networks. In the second half of the talk, I discuss a generalization of the “additive compositionality” of word embeddings in natural language processing. I show how to compute distributed representations of logical operations, including AND, OR, and NOT, which could serve as a basis for implementing “advanced thinking” by AI in the future.

Speaker 2: Akifumi Okuno (30 mins)

Title: Approximation Capability of Graph Embedding using Siamese Neural Network

Abstract: In this talk, we present our studies on the approximation capability of graph embedding using the Siamese neural network (NN). A prevailing line of previous work applies the inner-product similarity (IPS) to the neural network outputs, but the resulting Siamese NN can approximate only positive-definite similarities. To overcome this limitation, we propose novel similarities called shifted inner product similarity (SIPS) and weighted inner product similarity (WIPS) for the Siamese NN. We theoretically prove and empirically demonstrate their improved approximation capabilities.
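To make the three similarity forms concrete, here is a minimal Python sketch. It assumes the Siamese network output f(x) has already been computed and replaces the network with fixed, hypothetical vectors. SIPS is written as ⟨f(x), f(y)⟩ + u(x) + u(y) with a scalar shift u, and WIPS as Σ_k λ_k f_k(x) f_k(y) with weights λ_k that may be negative. Because any IPS Gram matrix is positive semidefinite, plain IPS cannot exactly reproduce either of the two toy targets below: negative squared distance (via SIPS) and an indefinite 2×2 matrix (via WIPS).

```python
def ips(fx, fy):
    """Inner-product similarity <f(x), f(y)>."""
    return sum(a * b for a, b in zip(fx, fy))


def sips(fx, fy, ux, uy):
    """Shifted IPS: <f(x), f(y)> + u(x) + u(y), with a scalar shift per input."""
    return ips(fx, fy) + ux + uy


def wips(fx, fy, weights):
    """Weighted IPS: sum_k lambda_k * f_k(x) * f_k(y); negative lambda_k are allowed."""
    return sum(w * a * b for w, a, b in zip(weights, fx, fy))


# Hypothetical stand-ins for inputs whose embeddings we control directly.
points = [(0.0, 1.0), (2.0, -1.0), (0.5, 0.5)]


# With f(x) = sqrt(2) * x and u(x) = -||x||^2, SIPS equals
# 2<x, y> - ||x||^2 - ||y||^2 = -||x - y||^2, which has a zero diagonal
# and negative off-diagonal entries, so it is not positive semidefinite.
def f_sips(x):
    return [2 ** 0.5 * v for v in x]


def u_sips(x):
    return -sum(v * v for v in x)


neg_sq_dist = [[sips(f_sips(x), f_sips(y), u_sips(x), u_sips(y)) for y in points]
               for x in points]

# WIPS with weights (1, -1) reproduces the indefinite matrix [[0, 1], [1, 0]]
# (eigenvalues +1 and -1), which no IPS Gram matrix can equal.
s = 0.5 ** 0.5
emb = [(s, s), (s, -s)]
indefinite = [[wips(a, b, (1.0, -1.0)) for b in emb] for a in emb]
```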

Speaker 3: Yoshikazu Terada (30 mins)

Title: Selective inference via multiscale bootstrap and its application

Abstract: We consider a general approach to selective inference for testing a null hypothesis represented as an arbitrarily shaped region in the parameter space of the multivariate normal model. This approach is useful for hierarchical clustering, where confidence levels are calculated only for the clusters that appear in the dendrogram and are therefore subject to heavy selection bias. Our computation is based on a raw confidence measure, called bootstrap probability, which is easily obtained by counting how many times the same cluster appears in bootstrap replicates of the dendrogram. We adjust the bias of the bootstrap probability by utilizing the scaling law in terms of geometric quantities of the region in the abstract parameter space, namely, signed distance and mean curvature. Although this idea has been used for non-selective inference of hierarchical clustering, its selective inference version has not been discussed in the literature. Our bias-corrected p-values are asymptotically second-order accurate in the large sample theory of smooth boundary surfaces of regions, and they are also justified for nonsmooth surfaces such as polyhedral cones. Moreover, the p-values are asymptotically equivalent to those of the iterated bootstrap while requiring less computation.
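The counting step described above can be sketched in Python as follows. This is a toy illustration under stated assumptions, not the speakers' implementation: the items being clustered are the columns of a data matrix, rows are resampled with replacement, clustering is average linkage on Euclidean distances, and `sigma2 = n / n'` sets the multiscale resampling size. The hypothetical helper `au_pvalue` fits the standard multiscale-bootstrap scaling law σ·Φ̄⁻¹(BP) = β₀ + β₁σ², where β₀ and β₁ play the roles of signed distance and mean curvature, by ordinary least squares, and returns the non-selective approximately unbiased value Φ̄(β₀ − β₁). The selective p-value discussed in the talk adjusts this further and is not shown.

```python
import random
from statistics import NormalDist


def column_distances(rows):
    """Euclidean distance between every pair of columns (the items being clustered)."""
    p = len(rows[0])
    return [[sum((r[i] - r[j]) ** 2 for r in rows) ** 0.5 for j in range(p)]
            for i in range(p)]


def dendrogram_clusters(dist):
    """Average-linkage agglomerative clustering; returns every merged cluster as a frozenset."""
    clusters = [frozenset([i]) for i in range(len(dist))]
    found = set()
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                ca, cb = clusters[a], clusters[b]
                d = sum(dist[i][j] for i in ca for j in cb) / (len(ca) * len(cb))
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        merged = clusters[a] | clusters[b]
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
        found.add(merged)
    return found


def bootstrap_probability(rows, cluster, n_boot=200, sigma2=1.0, seed=0):
    """Fraction of bootstrap dendrograms that contain `cluster`.

    Rows are resampled with replacement; sigma2 = n / n' is the multiscale
    scale (sigma2 = 1 gives the ordinary bootstrap).
    """
    rng = random.Random(seed)
    n = len(rows)
    n_prime = max(2, round(n / sigma2))
    target = frozenset(cluster)
    hits = 0
    for _ in range(n_boot):
        sample = [rows[rng.randrange(n)] for _ in range(n_prime)]
        if target in dendrogram_clusters(column_distances(sample)):
            hits += 1
    return hits / n_boot


def au_pvalue(sigma2s, bps):
    """Fit sigma * PhiBar^{-1}(BP) = beta0 + beta1 * sigma^2 and return PhiBar(beta0 - beta1)."""
    nd = NormalDist()
    # PhiBar^{-1}(bp) == Phi^{-1}(1 - bp); scales where BP is 0 or 1 carry no information.
    pts = [(s2, s2 ** 0.5 * nd.inv_cdf(1 - bp))
           for s2, bp in zip(sigma2s, bps) if 0 < bp < 1]
    if len({x for x, _ in pts}) < 2:
        raise ValueError("need 0 < BP < 1 at two or more distinct scales")
    mx = sum(x for x, _ in pts) / len(pts)
    my = sum(y for _, y in pts) / len(pts)
    # Ordinary least squares for the line psi = beta0 + beta1 * sigma^2.
    beta1 = (sum((x - mx) * (y - my) for x, y in pts)
             / sum((x - mx) ** 2 for x, _ in pts))
    beta0 = my - beta1 * mx
    return 1 - nd.cdf(beta0 - beta1)
```

In use, one would call `bootstrap_probability` at several values of `sigma2` around 1 and pass the results to `au_pvalue`. A production implementation would typically weight the fit by the sampling variance of each bootstrap probability rather than use the plain least-squares line here.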

Speaker 4: Thong Pham (30 mins)

Title: Some recent progress in modeling preferential attachment of growing complex networks

Abstract: Preferential attachment (PA) is a network growth mechanism commonly invoked to explain the emergence of the heavy-tailed degree distributions characteristic of growing networks that represent diverse real-world phenomena. In this talk, I will review some of our recent PA-related work, including a new method for estimating the nonparametric PA function from a single snapshot and a new condition for Bose-Einstein condensation in complex networks.
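For readers unfamiliar with the model, the growth mechanism itself can be sketched in a few lines of Python. This is an illustrative simulation only, not the estimation method from the talk: each new node attaches one edge to an existing node with probability proportional to A_k, where k is that node's current degree. The power-law form A_k = k^α and the one-edge-per-new-node rule are assumptions chosen for brevity; the talk concerns estimating A_k nonparametrically, that is, without assuming such a form.

```python
import random


def grow_pa_network(n_nodes, attachment, seed=0):
    """Grow a network by preferential attachment and return (edges, degrees).

    Starting from a single edge 0--1, each new node adds one edge to an
    existing node i chosen with probability proportional to attachment(k_i),
    where k_i is the current degree of node i.
    """
    rng = random.Random(seed)
    edges = [(0, 1)]
    degrees = [1, 1]
    for new in range(2, n_nodes):
        weights = [attachment(k) for k in degrees]
        target = rng.choices(range(len(degrees)), weights=weights, k=1)[0]
        edges.append((new, target))
        degrees[target] += 1
        degrees.append(1)
    return edges, degrees


# Hypothetical PA function A_k = k ** alpha; alpha = 1 is linear (Barabasi-Albert-style) attachment.
alpha = 1.0
edges, degrees = grow_pa_network(1000, lambda k: k ** alpha, seed=42)
print("max degree:", max(degrees), "mean degree:", sum(degrees) / len(degrees))
```

Setting `alpha` above or below 1 gives super- or sub-linear attachment; comparing the resulting degree distributions is a quick way to see why the shape of A_k matters.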